How to Implement Deduplication

The best way to apply data deduplication depends on the type of deduplication in question, the deduplication vendors used, and the user’s data protection objectives. Standalone deduplication software is implemented differently from a backup deduplication appliance or a storage system, which frequently has deduplication built in.

Deduplication is typically implemented at either the source or the target. The two differ not only in where the deduplication process runs but also in when it runs: before or after the data is stored in the backup system.

Effective Techniques of Deduplication

Inline and post-processing deduplication are the primary techniques for removing duplicate data. The right approach depends on the backup environment. Inline deduplication removes redundancies as data is ingested by the backup system, before it is written to backup storage. Although inline dedupe needs less backup storage, it can create bottlenecks. For high-performance primary storage, storage array vendors advise users to turn off inline deduplication.

Post-processing deduplication is an asynchronous technique that eliminates redundant data after it has been written to storage. Each duplicate block is replaced with a reference to the first occurrence of that block. With post-processing, customers can deduplicate specific workloads and quickly restore the latest backup without rehydrating it. The trade-off is that it needs more backup storage capacity than inline deduplication.
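
The difference between the two techniques can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: the class names `InlineStore` and `PostProcessStore`, the 4-byte block size, and the use of SHA-256 fingerprints are all assumptions made for the example.

```python
import hashlib

BLOCK_SIZE = 4  # unrealistically small, chosen so the example is easy to follow


def split_blocks(data: bytes) -> list[bytes]:
    """Cut a byte stream into fixed-size blocks."""
    return [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]


def fingerprint(block: bytes) -> str:
    return hashlib.sha256(block).hexdigest()


class InlineStore:
    """Hypothetical store that deduplicates blocks before writing them."""

    def __init__(self) -> None:
        self.blocks: dict[str, bytes] = {}  # fingerprint -> unique block
        self.recipe: list[str] = []         # ordered references to rebuild the data

    def write(self, data: bytes) -> None:
        for block in split_blocks(data):
            key = fingerprint(block)
            self.blocks.setdefault(key, block)  # keep only the first occurrence
            self.recipe.append(key)

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self.blocks.values())

    def read(self) -> bytes:
        return b"".join(self.blocks[k] for k in self.recipe)


class PostProcessStore:
    """Hypothetical store that writes everything first and deduplicates later."""

    def __init__(self) -> None:
        self.raw: list[bytes] = []          # landing area, duplicates included
        self.blocks: dict[str, bytes] = {}
        self.recipe: list[str] = []

    def write(self, data: bytes) -> None:
        self.raw.extend(split_blocks(data))  # no hashing on the write path

    def deduplicate(self) -> None:
        """Asynchronous pass: replace duplicates with references."""
        for block in self.raw:
            key = fingerprint(block)
            self.blocks.setdefault(key, block)
            self.recipe.append(key)
        self.raw.clear()

    def stored_bytes(self) -> int:
        raw = sum(len(b) for b in self.raw)
        return raw + sum(len(b) for b in self.blocks.values())

    def read(self) -> bytes:
        return b"".join(self.blocks[k] for k in self.recipe) + b"".join(self.raw)


data = b"AAAABBBBAAAAAAAA"  # four blocks, only two of them unique

inline = InlineStore()
inline.write(data)

post = PostProcessStore()
post.write(data)
before = post.stored_bytes()  # everything landed as written
post.deduplicate()
after = post.stored_bytes()

print(inline.stored_bytes(), before, after)
```

Both stores end up holding 8 bytes, but the post-process store held all 16 bytes until its deduplication pass ran. The inline store never needs that extra capacity, at the price of hashing every block on the write path.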

Required Elements of a Deduplication Solution

Here are the most important elements to look for in a deduplication solution:

Scalability

Scalability and performance go hand in hand, because processes that degrade performance are hard to scale. This holds for deduplication too: the more resource intensive a process is, the harder it is to scale up as needed. Businesses with a wide range of scalability requirements need to weigh these trade-offs when selecting a deduplication solution.

Performance

Different kinds of deduplication need different resources. Block-level deduplication carried out at the source across a large network consumes far more resources than file-level deduplication carried out at the target with a narrower scope.

Cost

The cost of deduplication technology varies with factors such as capability and complexity. Pricing goes up as more records are processed. Organizations should evaluate their budgets against quoted rates and industry standards, and determine how far long-term savings offset the cost.

Integration

Disjointed data sources can make deduplication more difficult. For instance, duplicate data is far more likely to occur in databases that are kept in silos. In other cases, a large network with several remote sites may need more rigorous data cleansing and transformation before deduplication. Companies considering deduplication must evaluate the current state of their data integration.

What is Target Deduplication?

Target-based deduplication was the first form of deduplication to enter the disk-based backup market, and it was the key innovation that made disk-based backup practical. Target deduplication reduces the amount of duplicate data on the target, or destination, device. By removing redundant data from backups, it frees up backup storage capacity. It can be implemented with purpose-built hardware or software.

Target deduplication is used mainly for backup and secondary storage. It takes place once the data arrives at the target storage device, and it can run either before the data is written to the device (inline) or after it has been written (post-processing). Target deduplication offloads the deduplication work from the client device, but sending the redundant data to the target requires more bandwidth. Intelligent disk targets (IDTs), virtual tape libraries (VTLs), and LAN-based backup-to-disk (B2D) appliances all use it.
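
The bandwidth trade-off can be illustrated with a rough sketch. The function names, the 4-byte chunk size, and the SHA-256 digests below are assumptions for the example, and the sketch deliberately ignores the small cost of exchanging digests between client and target.

```python
import hashlib

CHUNK_SIZE = 4  # toy chunk size, an assumption for readability


def split_chunks(data: bytes) -> list[bytes]:
    return [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]


def target_side_transfer(data: bytes) -> int:
    """Target deduplication: the client ships every byte and the
    target removes duplicates after the data arrives."""
    return len(data)


def source_side_transfer(data: bytes, known: set[str]) -> int:
    """Source deduplication: the client hashes each chunk and ships
    only chunks whose digest the target does not already know."""
    sent = 0
    for chunk in split_chunks(data):
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in known:
            known.add(digest)
            sent += len(chunk)
    return sent


backup = b"ABCD" * 10  # ten identical chunks

print(target_side_transfer(backup))         # bytes sent with target dedup
print(source_side_transfer(backup, set()))  # bytes sent with source dedup
```

In this toy case the target approach moves all 40 bytes across the network while the source approach moves 4, but the source approach spends client CPU on hashing, which is exactly the work target deduplication takes off the client.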

How Real-Time Deduplication Problem-Solving Works

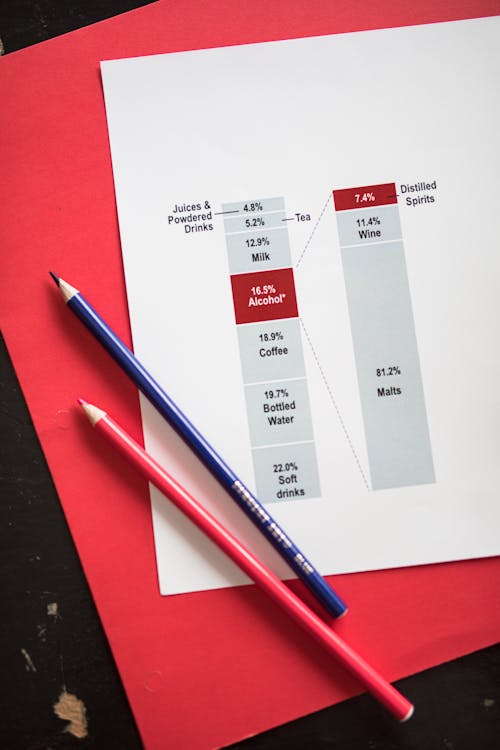

Imagine a business manager emailing a single 1 MB file, a graphic-rich financial forecast report, to the whole team, 500 copies in all. The company’s email server now holds 500 copies of the file. If every inbox is backed up, all 500 copies are saved, using up to 500 MB of server space. Even a rudimentary file-level deduplication mechanism would store only one instance of the report, with every other reference simply pointing to that one saved copy. The unique data then requires only 1 MB of bandwidth and storage on the server.
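
The email scenario can be reproduced with a small file-level sketch. The function name `dedupe_files`, the mailbox paths, and the use of SHA-256 as the file fingerprint are assumptions for illustration, and the 1 MB report is stood in for by 1 MB of zero bytes.

```python
import hashlib

MB = 1024 * 1024


def dedupe_files(files: dict[str, bytes]) -> tuple[dict[str, bytes], dict[str, str]]:
    """Store each distinct file once and point every name at its stored copy."""
    store: dict[str, bytes] = {}  # digest -> the single stored instance
    index: dict[str, str] = {}    # file name -> digest (the "pointer")
    for name, content in files.items():
        digest = hashlib.sha256(content).hexdigest()
        store.setdefault(digest, content)
        index[name] = digest
    return store, index


report = bytes(MB)  # placeholder for the 1 MB forecast report
mailboxes = {f"inbox_{i}/forecast.pdf": report for i in range(500)}

store, index = dedupe_files(mailboxes)

logical_mb = sum(len(c) for c in mailboxes.values()) // MB
physical_mb = sum(len(c) for c in store.values()) // MB
print(logical_mb, physical_mb)
```

The 500 mailbox entries add up to 500 MB of logical data, while the store holds a single 1 MB instance and 500 pointers to it.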

Another example is what happens when businesses periodically run full backups because of long-standing backup system design issues, and make full-file incremental copies of files in which only a few bytes have changed. Eight weekly full backups of a 100 TB file server would generate 800 TB of backups, with roughly another 8 TB of incremental backups over the same period. A strong post-process deduplication technology can reduce this 808 TB to less than 100 TB, all without affecting restore performance.
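
The effect of repeated full backups can be sketched on a toy scale. The simulation below is an assumption-laden illustration rather than a model of any real product: the "server" is 100 blocks of 1 KB, exactly one block changes each week after the first backup, and blocks are fingerprinted with SHA-256.

```python
import hashlib

BLOCK = 1024  # toy block size in bytes


def block_hashes(image: bytes) -> list[bytes]:
    """Fingerprint every fixed-size block of a backup image."""
    return [hashlib.sha256(image[i:i + BLOCK]).digest()
            for i in range(0, len(image), BLOCK)]


def simulate_weekly_fulls(weeks: int = 8, blocks: int = 100) -> tuple[int, int]:
    """Return (blocks backed up, unique blocks stored) for weekly full backups.

    Assumes weeks <= blocks and weeks < 256 so each change is distinct.
    """
    # Build a server image whose blocks are all different from each other.
    server = bytearray(
        b"".join(i.to_bytes(4, "big") * (BLOCK // 4) for i in range(blocks))
    )
    unique: set[bytes] = set()
    logical = 0
    for week in range(weeks):
        if week > 0:
            # One block is overwritten with new content each week.
            start = week * BLOCK
            server[start:start + BLOCK] = bytes([week]) * BLOCK
        hashes = block_hashes(bytes(server))  # a full backup reads every block
        logical += len(hashes)
        unique.update(hashes)                 # dedup keeps each block once
    return logical, len(unique)


logical, stored = simulate_weekly_fulls()
print(logical, stored)
```

Eight full backups read 800 blocks in total, but only 107 distinct blocks need to be stored: the 100 from the first backup plus one changed block for each of the seven later weeks.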

What is Data Storage?

Backups create duplicate versions of files for good reason. That said, the same file does not need to be copied over and over indefinitely. Deduplication instead keeps one clean backup copy, and additional instances in later backup versions simply link to that original. This preserves redundancy while conserving resources and storage space.

Because archive systems and backups both serve long-term data preservation, they are often confused with each other. Organizations use archive systems to store data that is no longer in active use, whereas backup systems exist for disaster recovery and preparedness. Duplicates can be created when storage volumes are merged or when an archive system is expanded with new segments. Deduplication maximizes archive efficiency.

What is Source Deduplication?

Source deduplication is the process of deduplicating data close to where it is created and stored. With source deduplication, the work usually takes place inside the file system. The file system itself scans new files and generates a hash for every file it scans. These hashes are then compared with the existing hashes. If a match is found, the copy is removed and the new file is made to point to the original, older file.

However, the deduplicated files are still treated as separate entities, so if one of them is later changed, a separate copy is created for it (copy-on-write). In addition, backing up a deduplicated file system can itself reintroduce duplicates.
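
A minimal sketch of this behavior follows. The `DedupFS` class and its methods are hypothetical and exist only for this example; a real file system works at a much lower level, and SHA-256 is simply an assumed choice of hash.

```python
import hashlib


class DedupFS:
    """Hypothetical in-memory file system with file-level deduplication."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}  # hash -> content, stored once
        self.refs: dict[str, int] = {}     # hash -> number of files pointing at it
        self.files: dict[str, str] = {}    # path -> hash of its content

    def write(self, path: str, content: bytes) -> None:
        if path in self.files:
            self._release(self.files[path])  # drop the old pointer first
        digest = hashlib.sha256(content).hexdigest()
        if digest not in self.blobs:
            self.blobs[digest] = content     # no match: store a new copy
        # Match or not, the file becomes a pointer to the stored copy.
        self.refs[digest] = self.refs.get(digest, 0) + 1
        self.files[path] = digest

    def _release(self, digest: str) -> None:
        self.refs[digest] -= 1
        if self.refs[digest] == 0:           # nobody points here any more
            del self.refs[digest]
            del self.blobs[digest]

    def read(self, path: str) -> bytes:
        return self.blobs[self.files[path]]


fs = DedupFS()
fs.write("/docs/report.txt", b"quarterly numbers")
fs.write("/tmp/report_copy.txt", b"quarterly numbers")  # duplicate content
copies_after_duplicate = len(fs.blobs)

# Changing one file must not affect the other: it gets its own copy.
fs.write("/tmp/report_copy.txt", b"quarterly numbers, revised")
copies_after_change = len(fs.blobs)

print(copies_after_duplicate, copies_after_change)
```

The two identical files share one stored copy until one of them is modified, at which point the store holds two copies and the original file still reads back unchanged.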

What is Data Duplication?

Primary data sets can also be deduplicated, although deduplication first appeared in backup and secondary storage. It is especially useful for optimizing the capacity and performance of flash storage. Primary storage deduplication is performed by the storage hardware or by the operating system software.

Deduplication techniques are promising for cloud service providers looking to cut costs. By deduplicating the data they hold, providers can reduce the disk space and bandwidth needed for off-site replication.

Bottom line

Managing product data deduplication efficiently is essential for smooth data processing and better productivity. Combining effective data deduplication with advanced management tools helps you optimize how your data is used and processed. Contact us at Pimberly to learn more about our management tools.